If your hands touch a keyboard for work, Artificial Intelligence is going to change your job in the next few years.

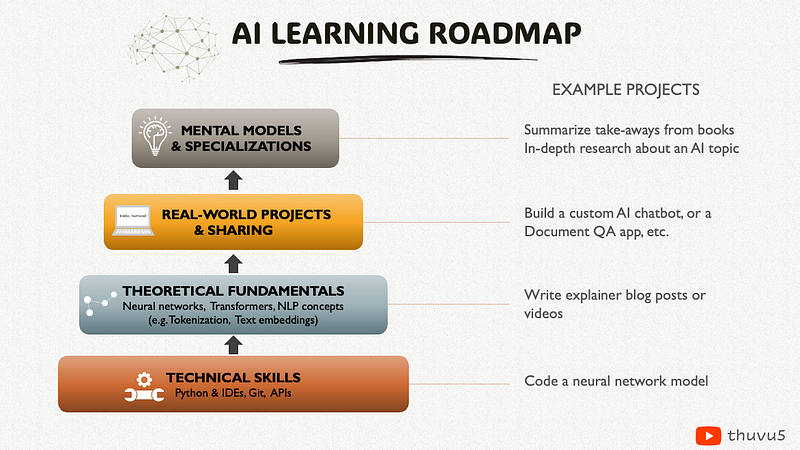

In this blog post, I’ll be sharing with you a roadmap for expanding your AI skillset, together with the learning resources.

This roadmap goes down to the basics, so even if you don’t have any background in machine learning, mathematics or programming, I hope you’ll walk away with some useful ideas of where to start.

👉 Note: You can also watch the video version of this blog post and download the full Roadmap PDF on my YouTube channel.

Now, let’s get started! 💪

Why Should You Learn AI?

Artificial Intelligence, Machine Learning and Deep Learning have been around since the 1950s. The field has taken off in the last decade (and especially in recent years) thanks to advances in algorithms, computing power and, above all, the abundance of data.

The AI we often talk about today is Generative AI, which is a subset of Machine Learning and Deep Learning.

Generative AI can now write code, generate stunning images, compose music, help diagnose rare conditions, create presentation outlines, interpret images and much, much more.

Companies around the world are racing to capture value from generative AI, using it to create better products and services, improve processes and automate time-consuming tasks.

Large enterprises are rushing to implement AI solutions to solve their specific problems. This is a gold mine because everything is still very new. If you have the knowledge and know how to build things with AI, you can create huge impact.

As with anything in its early days, AI models still have many issues that need to be solved. They are not yet fully reliable or stable, and they can carry biases, among other things. That is why we need more people who understand the technology in depth and can get to the bottom of these problems. This knowledge also helps you avoid a lot of misunderstanding and misinformation, such as “AI can do everything, as long as you got the right plugins!” 😅

The Roadmap

(1) Technical Aspects

>>> Python & IDEs:

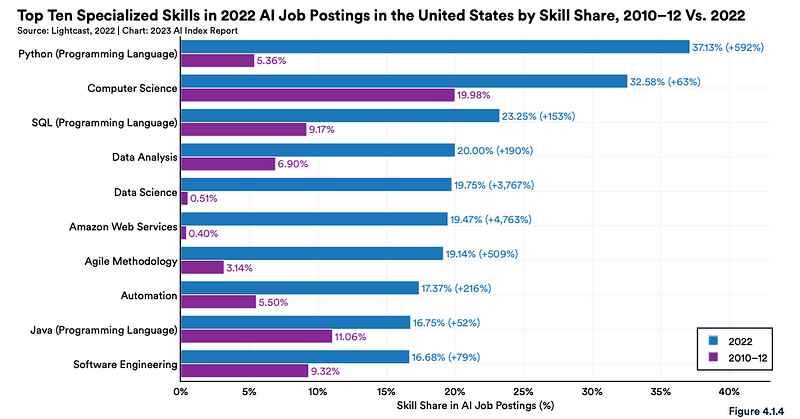

On the fundamental level, you want to learn the basics of programming. Python is one of — if not THE — most used languages for Machine Learning and Neural Networks, so some coding knowledge in Python would be essential.

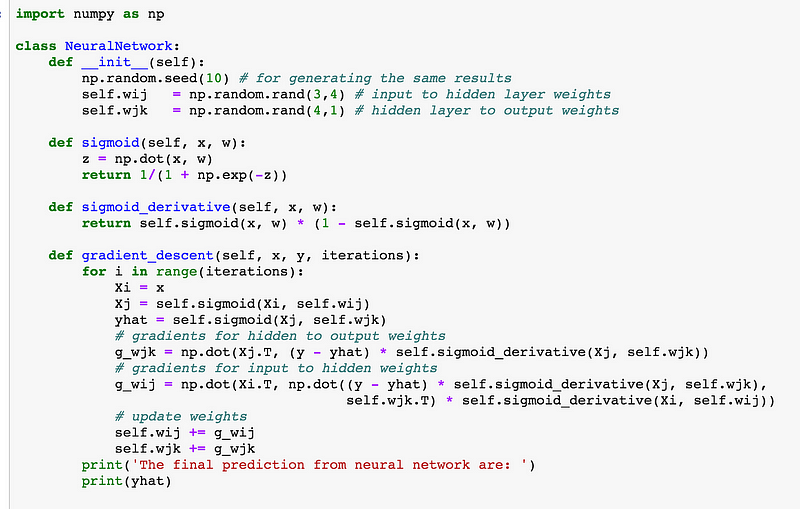

With Python, you can even code a simple neural network from scratch using the NumPy library, which will help you understand how things work under the hood of a neural net.
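To make that a bit more concrete, here’s a minimal sketch of what a single layer of a neural network computes, written with nothing but NumPy. The layer sizes, the random weights and the choice of ReLU as the activation function are arbitrary assumptions for illustration:

```python
import numpy as np

def relu(z):
    """ReLU activation: keeps positive values and sets negative ones to 0."""
    return np.maximum(0.0, z)

def dense_forward(x, W, b):
    """One fully connected layer: weighted sum of the inputs plus a bias, then ReLU."""
    return relu(x @ W + b)

rng = np.random.default_rng(seed=0)
x = rng.normal(size=(4, 3))        # a batch of 4 examples, 3 input features each
W = rng.normal(size=(3, 2)) * 0.1  # weights connecting 3 inputs to 2 neurons
b = np.zeros(2)                    # one bias per neuron

out = dense_forward(x, W, b)
print(out.shape)  # (4, 2): one value per example per neuron
```

A full network is mostly this same operation repeated layer after layer, plus a way to adjust `W` and `b` during training.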

With a basic understanding of programming in Python, you can read the source code of popular open-source Python libraries and get a good understanding of how they work.

To work with Python, you can use several IDEs (Integrated Development Environments), such as VS Code, PyCharm or Jupyter Notebook. Jupyter Notebook is my favourite environment for learning Python, but you can use any of these IDEs. All of them have free versions!

If you have never used Python before, make sure to get familiar with these four basics of Python:

- Data types (int, float, strings) and the operations you can do on them

- Data structures (lists, tuples, and dictionaries) and how to work with them

- Conditionals, loops, functions

- Object-oriented programming and using external libraries.
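Here’s a quick, illustrative tour of those four basics in one short script. The variable names and the little `Neuron` class are made up for this example, and the “library” imported is Python’s built-in `math` module:

```python
import math  # using a library: imports go at the top of a file

# 1. Data types and operations on them
count = 3                  # int
price = 2.5                # float
name = "neural network"    # str
total = count * price      # arithmetic on numbers
title = name.title()       # a string method

# 2. Data structures
layers = [784, 128, 10]               # list: ordered, mutable
image_shape = (28, 28)                # tuple: ordered, immutable
config = {"lr": 0.01, "epochs": 5}    # dict: key-value pairs
layers.append(1)                      # lists can grow
pixels = image_shape[0] * image_shape[1]
learning_rate = config["lr"]

# 3. Conditionals, loops and functions
def describe(size):
    """Label a layer as 'large' or 'small' based on its number of units."""
    if size >= 100:
        return "large"
    return "small"

labels = []
for size in layers:
    labels.append(describe(size))

# 4. Object-oriented programming: a class bundles data and behaviour
class Neuron:
    def __init__(self, weight, bias):
        self.weight = weight
        self.bias = bias

    def activate(self, x):
        """Weighted input passed through a sigmoid function."""
        return 1 / (1 + math.exp(-(self.weight * x + self.bias)))

neuron = Neuron(weight=0.0, bias=0.0)
print(total, title, labels, pixels, neuron.activate(5.0))
```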

When learning Python, the most essential libraries to learn are:

- NumPy for computing and working with numerical data.

- Pandas for wrangling tabular data, or dataframes.
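Here’s a small taste of both libraries. The scores and model accuracies below are made-up numbers for illustration, not real results:

```python
import numpy as np
import pandas as pd

# NumPy: fast, vectorized math on whole arrays (no Python loops needed)
scores = np.array([0.2, 0.9, 0.4, 0.7])
standardized = (scores - scores.mean()) / scores.std()   # zero mean, unit variance
high_scores = scores[scores > 0.5]                        # boolean indexing

# pandas: tabular data, where rows are records and columns are features
df = pd.DataFrame({
    "model": ["A", "B", "A", "B"],
    "accuracy": [0.81, 0.86, 0.79, 0.88],
})
mean_by_model = df.groupby("model")["accuracy"].mean()   # average accuracy per model
best_run = df.loc[df["accuracy"].idxmax()]               # row with the highest accuracy
print(mean_by_model)
print(best_run)
```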

Once you’re familiar with those libraries, you can explore other libraries needed for your projects. For example, the matplotlib library is useful for data visualization, spaCy for basic text processing, and so on. For working with language models, LangChain is a very useful library for building applications on top of LLMs.

What’s nice with Python is that there are many open-source libraries that you can use to develop almost anything you want.

>>> Git version control:

The next thing I’d recommend learning is Git version control.

Git is open-source software for tracking changes in your project.

Version control is essential when you’re collaborating with other people on large or complex projects. There are actually just a few concepts you need to understand to start using Git, as shown in this diagram.

Many people confuse Git with GitHub. GitHub is a hosting platform for Git repositories that lets you share your project with other people across the Internet, while Git is the version control software itself.

I think the easiest way to start using Git is GitHub Desktop, a graphical user interface for Git. If you prefer the command line/terminal, you can use Git that way as well.

>>> APIs:

Another essential thing to learn is APIs (Application Programming Interfaces). Knowing how to use an API is a magical skill that opens up a whole new world of possibilities!

An API is a way for computer programs to communicate with each other. Basically, there are two terms you need to learn:

- API request (also referred to as an “API call”), and

- API response.

Every time you open Instagram and scroll through your feed, the app is basically sending requests to Instagram’s API and getting responses back, with a recommender model behind the scenes deciding which posts to return.

Depending on the API, you can request data, model predictions (as in the case of the OpenAI API), and more. Without knowing how to use an API, you would be limited to the chat interface (for example, the OpenAI website). The ChatGPT website is a great way to use the ChatGPT model, but you cannot build your own tools with it or integrate the ChatGPT/GPT-4 models into your existing systems. For that, you need to use their model APIs.
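To show what a request and a response look like in code, here’s a minimal sketch using only Python’s standard library. It assumes OpenAI’s chat completions endpoint (`https://api.openai.com/v1/chat/completions`), an API key stored in the `OPENAI_API_KEY` environment variable, and the model name `gpt-4o-mini`; model names change over time, so check OpenAI’s API docs before relying on any of these details. The helper names `build_chat_request` and `extract_reply` are hypothetical:

```python
import json
import os
import urllib.request

API_URL = "https://api.openai.com/v1/chat/completions"  # assumed endpoint

def build_chat_request(prompt, api_key, model="gpt-4o-mini"):
    """Build (but don't send) the HTTP request: this is the 'API request'."""
    body = {
        "model": model,  # assumed model name
        "messages": [{"role": "user", "content": prompt}],
    }
    return urllib.request.Request(
        API_URL,
        data=json.dumps(body).encode("utf-8"),
        headers={
            "Content-Type": "application/json",
            "Authorization": f"Bearer {api_key}",
        },
        method="POST",
    )

def extract_reply(response_json):
    """Pull the model's text out of the JSON 'API response'."""
    return response_json["choices"][0]["message"]["content"]

if __name__ == "__main__":
    key = os.environ.get("OPENAI_API_KEY")
    if key:  # only makes a real network call when a key is configured
        req = build_chat_request("Explain an API in one sentence.", key)
        with urllib.request.urlopen(req) as resp:
            print(extract_reply(json.load(resp)))
```

In practice, most people use the official `openai` Python package instead of raw HTTP, but the idea is the same: you send a request with your input and get a JSON response back.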

👉 Useful resources:

- Python for Everybody Specialization (Coursera)

- Python Tips (Free online) — for references

- 📚 Git book

- 🎥 What is an API

- Deeplearning.ai short courses

(2) Theoretical Fundamentals

Okay, onto the next level: the theoretical fundamentals of AI. We need some high-level theoretical understanding of AI and its subfields, such as machine learning, neural networks/deep learning, computer vision, NLP and reinforcement learning. Here are the main AI concepts I’d recommend for a technical learner:

- Basics of neural networks

- Neural network architectures, including Transformer architecture

- What it actually means to “train” a language model

- Some important NLP concepts such as “text embeddings”

It is totally up to you how deep you want to go into the theories. Sometimes, a high-level understanding is just what you need!

As shown in the diagram above, deep learning is a subset of Machine Learning. Traditional machine learning algorithms mostly fall into two groups: supervised learning, where you have target labels to train the prediction model on, and unsupervised learning, where there are no target labels.

In general, these algorithms work best with tabular data: think of a data table where each record is a row and each data feature is a column. It could be helpful to go over some machine learning jargon and get a high-level idea of popular machine learning algorithms.
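To see the difference between the two settings, here’s a minimal NumPy sketch on a made-up table with two feature columns. With labels, a nearest-centroid classifier simply averages each class; without labels, k-means has to find the groups on its own. I picked these two algorithms only because they’re short enough to write from scratch, and the k-means here uses a simple farthest-first initialization (an assumption to keep the example deterministic):

```python
import numpy as np

rng = np.random.default_rng(seed=42)
# A tiny made-up table: each row is a record, each of the 2 columns a feature
X = np.vstack([
    rng.normal(loc=[0.0, 0.0], scale=0.5, size=(50, 2)),
    rng.normal(loc=[4.0, 4.0], scale=0.5, size=(50, 2)),
])
y = np.array([0] * 50 + [1] * 50)  # target labels, used only in the supervised part

def nearest(points, centers):
    """Index of the closest center (Euclidean distance) for every row."""
    dists = np.linalg.norm(points[:, None, :] - centers[None, :, :], axis=2)
    return dists.argmin(axis=1)

# Supervised: labels are known, so "training" means averaging each class
centroids = np.array([X[y == c].mean(axis=0) for c in (0, 1)])
accuracy = (nearest(X, centroids) == y).mean()

# Unsupervised: no labels, so k-means discovers the groups by itself
def kmeans(points, k, n_iter=10):
    centers = [points[0]]
    for _ in range(1, k):
        # farthest-first init: next center is the point farthest from existing centers
        d = np.linalg.norm(points[:, None, :] - np.array(centers)[None, :, :], axis=2).min(axis=1)
        centers.append(points[d.argmax()])
    centers = np.array(centers)
    for _ in range(n_iter):
        assign = nearest(points, centers)  # assignment step
        centers = np.array([               # update step (keep old center if a cluster empties)
            points[assign == j].mean(axis=0) if np.any(assign == j) else centers[j]
            for j in range(k)
        ])
    return centers, nearest(points, centers)

centers, clusters = kmeans(X, k=2)
print(f"supervised accuracy: {accuracy:.2f}")
```

Note that k-means only returns cluster ids, not meaningful labels: it can find the two groups, but it cannot know which one you would call “class 0”.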

However, since most of today’s AI is built on Deep Learning, I think you can choose to jump straight into Deep Learning. You will likely pick up the essential machine learning concepts along the way, and you can fill in the gaps in your understanding if needed.

>>> How Neural Networks Work

Neural networks are the algorithms behind deep learning, and especially behind the Generative AI we see today. They work incredibly well for unstructured data like text and images.

For neural networks, you want to understand the main concepts such as:

- forward propagation

- back propagation

- activation functions

- gradient descent algorithm

- how weights are updated

It is helpful to understand how all the calculations in the neural networks work. They are really not too difficult to understand. So don’t shy away from it!
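Here’s a minimal sketch of all five concepts working together: a tiny two-layer network trained on the XOR problem with plain NumPy. The sigmoid activation, binary cross-entropy loss, 8 hidden neurons, learning rate and number of steps are all assumptions picked for illustration; with a different random seed, training may need more steps:

```python
import numpy as np

def sigmoid(z):
    return 1.0 / (1.0 + np.exp(-z))

# XOR: a classic problem a single neuron cannot solve, but a 2-layer network can
X = np.array([[0, 0], [0, 1], [1, 0], [1, 1]], dtype=float)
y = np.array([[0], [1], [1], [0]], dtype=float)

rng = np.random.default_rng(seed=1)
W1 = rng.normal(size=(2, 8))   # input -> hidden weights (8 hidden neurons)
b1 = np.zeros((1, 8))
W2 = rng.normal(size=(8, 1))   # hidden -> output weights
b2 = np.zeros((1, 1))
lr = 1.0                       # learning rate: size of each gradient descent step
losses = []

for step in range(5000):
    # 1) Forward propagation
    h = sigmoid(X @ W1 + b1)   # hidden layer activations
    p = sigmoid(h @ W2 + b2)   # predicted probability that the output is 1
    p_safe = np.clip(p, 1e-12, 1 - 1e-12)  # avoid log(0)
    losses.append(-np.mean(y * np.log(p_safe) + (1 - y) * np.log(1 - p_safe)))

    # 2) Backpropagation: chain rule from the loss back to every weight
    dz2 = (p - y) / len(X)             # gradient w.r.t. the output pre-activation
    dW2 = h.T @ dz2
    db2 = dz2.sum(axis=0, keepdims=True)
    dz1 = (dz2 @ W2.T) * h * (1 - h)   # sigmoid'(z) = s(z) * (1 - s(z))
    dW1 = X.T @ dz1
    db1 = dz1.sum(axis=0, keepdims=True)

    # 3) Gradient descent: move each weight a small step against its gradient
    W2 -= lr * dW2
    b2 -= lr * db2
    W1 -= lr * dW1
    b1 -= lr * db1

print(f"loss: {losses[0]:.3f} -> {losses[-1]:.3f}")
print("predictions:", np.round(p.ravel(), 2))
```

Frameworks like Keras, TensorFlow or PyTorch compute the backpropagation step for you automatically, but this is essentially what happens inside.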

>>> Neural Network Architectures

A neural network, in itself, is pretty simple and maybe even a bit underwhelming from a mathematical point of view.

However, when you stack many network layers together into a certain complex architecture, they can do surprisingly amazing things, from recognizing digits and classifying cats and dogs to, in the case of today’s LLMs (Large Language Models), doing pretty much anything you ask them to do.

Convolutional Neural Networks (CNNs) used to be very popular for deep learning with images because they can recognize patterns in images. Meanwhile, Recurrent Neural Networks (RNNs) used to be very popular for text modelling because they can process sequences.

However, these architectures have been largely overtaken since the invention of Transformers in 2017, the architecture behind today’s large language models.

Transformers outperform earlier models on many tasks, so you might want to skip learning the earlier architectures if you don’t have time.

I’d recommend watching a few YouTube videos to get a high-level understanding before you dive into the nitty-gritty details. This video by Andrej Karpathy shows you how to code a GPT from scratch, if you want to dive into the details.
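If you’d like a peek at the core idea first, here’s a minimal NumPy sketch of single-head scaled dot-product self-attention, the building block at the heart of the Transformer. The sizes and random weights are arbitrary, and a real Transformer adds multiple heads, learned parameters, feed-forward layers and more. The optional causal mask is what GPT-style models use so that a token cannot look at future tokens:

```python
import numpy as np

def softmax(x, axis=-1):
    x = x - x.max(axis=axis, keepdims=True)  # subtract the max for numerical stability
    e = np.exp(x)
    return e / e.sum(axis=axis, keepdims=True)

def self_attention(X, Wq, Wk, Wv, causal=False):
    """Single-head scaled dot-product self-attention for X of shape (tokens, d_model)."""
    Q, K, V = X @ Wq, X @ Wk, X @ Wv          # queries, keys, values
    scores = Q @ K.T / np.sqrt(K.shape[-1])   # similarity of every token with every other token
    if causal:
        # GPT-style mask: token i may only attend to tokens 0..i
        n = scores.shape[0]
        future = np.triu(np.ones((n, n), dtype=bool), k=1)
        scores = np.where(future, -np.inf, scores)
    weights = softmax(scores, axis=-1)        # each row sums to 1
    return weights @ V, weights               # each output is a weighted mix of the values

rng = np.random.default_rng(seed=0)
seq_len, d_model, d_k = 5, 8, 4
X = rng.normal(size=(seq_len, d_model))       # stand-in for 5 token embeddings
Wq, Wk, Wv = (rng.normal(size=(d_model, d_k)) for _ in range(3))

out, attn = self_attention(X, Wq, Wk, Wv)
print(out.shape, attn.shape)  # (5, 4) (5, 5)
```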

>>> Training a Language Model

As you work with AI models, you also want a high-level understanding of how the foundation models underlying them are trained.

For example, OpenAI’s ChatGPT was built on a GPT-3.5 foundation model, but it also went through extra training, including Reinforcement Learning from Human Feedback (RLHF).

I’d recommend watching Andrej Karpathy’s Introduction to LLMs video to better understand the process behind training an LLM.
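At its core, pre-training a language model means teaching it to predict the next token. Here’s a deliberately tiny sketch of that objective: a character-level bigram model, “trained” by counting which character follows which, and scored with the same kind of loss (average negative log-likelihood) used for LLMs. The training text is made up, and real LLMs learn these probabilities with a neural network over subword tokens rather than a count table:

```python
import numpy as np

text = "hello world, hello there"                # made-up training text
vocab = sorted(set(text))
stoi = {ch: i for i, ch in enumerate(vocab)}     # character -> integer id
pairs = list(zip(text, text[1:]))                # (current char, next char) examples

# "Training": count how often each character follows each other character.
# Starting every count at 1 (add-one smoothing) avoids zero probabilities.
counts = np.ones((len(vocab), len(vocab)))
for cur, nxt in pairs:
    counts[stoi[cur], stoi[nxt]] += 1
probs = counts / counts.sum(axis=1, keepdims=True)   # row i: P(next char | char i)

# The objective: average negative log-likelihood of the true next character
nll = -np.mean([np.log(probs[stoi[cur], stoi[nxt]]) for cur, nxt in pairs])
uniform_nll = np.log(len(vocab))   # loss of a model that guesses uniformly at random

def generate(start, n_chars, seed=0):
    """Sample text one character at a time from the trained bigram model."""
    rng = np.random.default_rng(seed)
    out = [start]
    for _ in range(n_chars):
        next_id = rng.choice(len(vocab), p=probs[stoi[out[-1]]])
        out.append(vocab[next_id])
    return "".join(out)

print(f"loss {nll:.2f} vs uniform guess {uniform_nll:.2f}")
print(generate("h", 20))
```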

>>> Embeddings & Vector Database

When working with language models, you might hear the common term: text embeddings. This is a useful concept to understand.

Text embedding is a numerical representation of text. It simply converts text into vectors of numbers.

We often forget that computers cannot actually understand language; they only work with numbers. So this conversion step is necessary.

Many embedding models have been created, with ever smarter ways to capture meaning in those vectors.
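Here’s a minimal sketch of that conversion as it happens inside a language model: text is split into tokens, each token is mapped to an integer id, and each id looks up a row in an embedding table. The tiny vocabulary, whitespace tokenizer and random table are assumptions for illustration; real models use subword tokenizers and learn the table during training. Averaging the token vectors is just one simple way to get a single vector for the whole text:

```python
import numpy as np

# Hypothetical toy vocabulary; real models use learned subword tokenizers
vocab = {"<unk>": 0, "cats": 1, "dogs": 2, "like": 3, "to": 4, "play": 5}

def tokenize(text):
    """Lowercase, split on whitespace, map each word to its id (unknown words -> 0)."""
    return [vocab.get(word, vocab["<unk>"]) for word in text.lower().split()]

rng = np.random.default_rng(seed=0)
embedding_dim = 4
# One row of numbers per token id; a real model learns these values during training
embedding_table = rng.normal(size=(len(vocab), embedding_dim))

ids = tokenize("Cats like to play")
vectors = embedding_table[ids]        # text -> ids -> one vector per token
text_vector = vectors.mean(axis=0)    # one simple way to get a single vector for the text
print(ids)            # [1, 3, 4, 5]
print(vectors.shape)  # (4, 4)
```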

When you ask ChatGPT a question, under the hood your question is automatically split into tokens and converted into numeric vectors that the model can work with.

The model uses these numeric vectors to “calculate” the response. To “remember” the conversation as you continue chatting, the earlier questions and responses are fed back to the model as context, and many AI applications also store text as embeddings in a vector database so that relevant information can be retrieved later.

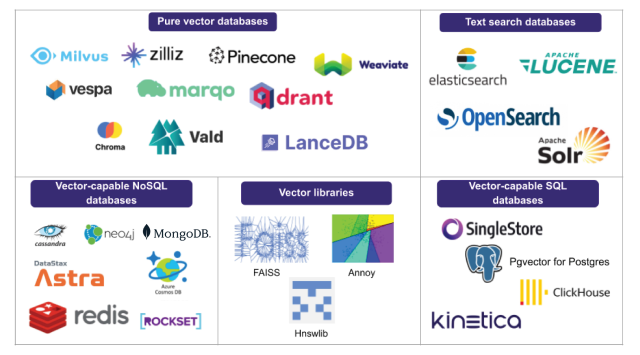

If you build an AI application with language models, you’ll often need to set up a vector database yourself. Popular open-source options include Chroma and FAISS (a library for efficient similarity search), which you can simply install and use for your AI project in Python.
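To demystify what a vector database does, here’s a toy in-memory version: it stores (vector, text) pairs and returns the most similar texts for a query using cosine similarity. The `embed` function is a hashed bag-of-words stand-in for a real embedding model, and `InMemoryVectorStore` is a hypothetical class, not the API of Chroma or FAISS, which do the same job at scale with much smarter indexing:

```python
import re
import zlib
import numpy as np

def embed(text, dim=512):
    """Toy stand-in for an embedding model: hashed bag-of-words, scaled to unit length."""
    vec = np.zeros(dim)
    for word in re.findall(r"[a-z0-9]+", text.lower()):
        vec[zlib.crc32(word.encode("utf-8")) % dim] += 1.0  # stable hash -> bucket
    norm = np.linalg.norm(vec)
    return vec / norm if norm > 0 else vec

class InMemoryVectorStore:
    """Hypothetical minimal vector store: keeps (vector, text) pairs, answers top-k queries."""

    def __init__(self, embed_fn):
        self.embed_fn = embed_fn
        self.texts = []
        self.vectors = []

    def add(self, texts):
        for text in texts:
            self.texts.append(text)
            self.vectors.append(self.embed_fn(text))

    def query(self, question, k=1):
        q = self.embed_fn(question)
        sims = np.array(self.vectors) @ q  # cosine similarity, since all vectors are unit length
        top = np.argsort(-sims)[:k]        # indices of the k most similar texts
        return [(self.texts[i], float(sims[i])) for i in top]

docs = [
    "Transformers use self attention to model text",
    "Convolutional networks detect patterns in images",
    "Git tracks changes to your project files",
]
store = InMemoryVectorStore(embed)
store.add(docs)
print(store.query("How does Git track changes to files?", k=1))
```

Swapping `embed` for a real embedding model is what makes search work on meaning rather than on shared words.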

👉 Useful resources:

- 🎥 3Blue1Brown Neural Network playlist

- ⏩ fast.ai resources

- 🎥 CodeEmporium Transformers playlist

- 🤖 Deep Learning Specialization (Coursera/ Deeplearning.ai)

- 📚 Deep Learning book (Ian Goodfellow, Yoshua Bengio and Aaron Courville)

- 💬 Natural Language Processing Specialization (Coursera/ Deeplearning.ai)

- 🎥 Let’s Build GPT from scratch (Andrej Karpathy)

(3) Build Real-world Projects & Share

No matter where you are in this journey, you can build relevant projects to get your hands dirty and experiment. This helps you connect the dots and challenge your understanding. If you are learning Python, you can build your first neural network using the Keras or TensorFlow library. It’s basically a few lines of code! If that’s too high-level, you can try writing a neural network and implementing gradient descent from scratch with NumPy!
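For example, here’s a minimal sketch of gradient descent from scratch with NumPy: fitting a single linear neuron (y = w·x + b) to made-up data by repeatedly stepping against the gradient of the mean squared error. The data, learning rate and number of epochs are assumptions for illustration:

```python
import numpy as np

rng = np.random.default_rng(seed=0)
x = rng.uniform(-1, 1, size=100)
y = 3.0 * x + 0.5 + rng.normal(scale=0.1, size=100)  # made-up data: y = 3x + 0.5 plus noise

w, b = 0.0, 0.0   # the parameters (weight and bias) to learn
lr = 0.1          # learning rate

for epoch in range(500):
    error = (w * x + b) - y            # forward pass, then prediction error
    loss = np.mean(error ** 2)         # mean squared error
    grad_w = 2 * np.mean(error * x)    # dLoss/dw
    grad_b = 2 * np.mean(error)        # dLoss/db
    w -= lr * grad_w                   # step against the gradient
    b -= lr * grad_b

print(f"w = {w:.2f}, b = {b:.2f}, loss = {loss:.4f}")
```

The learned `w` and `b` should land close to 3 and 0.5, the values used to generate the data.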

If you are learning the theory, a real-world project could be to pick one specific concept you find interesting and write a blog post or make a video about it. This helps you understand it more deeply, and helps other people too!

If you are ready to tackle more complex AI projects, you can build a real-world application. For example, you can use LangChain to create a document retrieval app, basically a “Chat with PDF” kind of application where you can ask for specific information in your documents. Or you can create your own chatbot for a specialized domain.
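Before reaching for a framework, it helps to see the basic pipeline behind a “Chat with PDF” app in plain Python: split the document into chunks, retrieve the chunks most relevant to the question, and put them into a prompt for the LLM. This sketch is not LangChain’s API; the function names are hypothetical, and the word-overlap scoring is a simple stand-in for embedding search in a vector database:

```python
import re

def chunk_text(text, chunk_size=40, overlap=10):
    """Split a document into overlapping windows of `chunk_size` words."""
    words = text.split()
    step = chunk_size - overlap
    starts = range(0, max(len(words) - overlap, 1), step)
    return [" ".join(words[i:i + chunk_size]) for i in starts]

def word_set(text):
    return set(re.findall(r"[a-z0-9]+", text.lower()))

def retrieve(chunks, question, k=2):
    """Rank chunks by how many words they share with the question."""
    q = word_set(question)
    return sorted(chunks, key=lambda c: len(q & word_set(c)), reverse=True)[:k]

def build_prompt(question, context_chunks):
    """Assemble a grounded prompt to send to an LLM."""
    context = "\n---\n".join(context_chunks)
    return (
        "Answer the question using only the context below.\n\n"
        f"Context:\n{context}\n\n"
        f"Question: {question}\nAnswer:"
    )

document = (
    "Our store opens at nine every weekday. Refunds are accepted within 30 days "
    "of purchase with a receipt. Shipping is free for orders above fifty euros."
)
chunks = chunk_text(document, chunk_size=8, overlap=2)
question = "Are refunds accepted?"
prompt = build_prompt(question, retrieve(chunks, question, k=1))
print(prompt)
```

The resulting prompt is what you would send to a model API; LangChain provides ready-made components for each of these steps.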

No matter which project you do, big or small, make sure you document it for your future self, and share it with other people through articles and social media posts. You never know how many people might find it helpful!

👉 Example project:

🎥 Building a Chatbot with ChatGPT API and Reddit Data

(4) Mental models & Specialized AI areas

The next thing I’d recommend is to develop mental models around AI and specialize in a certain area within AI.

Reading books about AI is a great way to cut through the noise on social media and get a more well-rounded background in AI. This is my current AI book list:

- Life 3.0 (Max Tegmark)

- Superintelligence (Nick Bostrom)

- The Coming Wave (Mustafa Suleyman, Michael Bhaskar)

- Human Compatible (Stuart Russell)

- The Alignment Problem (Brian Christian)

- Artificial Intelligence: A Modern Approach, Global Edition (Peter Norvig, Stuart Russell)

- I, Human: AI, Automation, and the Quest to Reclaim What Makes Us Unique (Tomas Chamorro-Premuzic)

This also equips you with the right frameworks and tools to reason about and interpret what you see or hear about AI today. You can find my AI book list in the roadmap.

Personally, I find it crazy how much important work around AI is not talked about more widely in the mainstream media. There are many different areas around AI that don’t make headlines. For example:

- Advanced prompt engineering methods to improve the quality of LLM responses, like self-consistency, chain-of-thought prompting or automatic prompting.

- AutoGen (Microsoft): A framework that allows you to develop LLM applications using multiple agents that can converse with each other to solve tasks.

- Advanced document QA (or retrieval-augmented generation, RAG) with multimodal documents that works well with complex tables, images and other data structures.

- AI security and hacking: The other day I watched a video of a researcher who uncovered some serious security issues with machine learning models. This area has been largely overlooked until now, so if you have expertise in computer security, please do humanity a favor and look into this! 🤯

- AI safety is the research area focused on aligning AI’s goals with human goals. Understanding large AI models and knowing how to make them safe are increasingly a priority alongside improving AI capabilities.

- And finally, AI regulation and AI governance, if you are interested in law. In Europe, the EU AI Act is one of the big upcoming regulations on the use of AI. Similarly, the US government recently issued the Executive Order on the Safe, Secure, and Trustworthy Development and Use of Artificial Intelligence (EO) to address the potential risks of AI.
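To make the prompt engineering methods above a bit less abstract, here’s a minimal sketch of chain-of-thought prompting combined with self-consistency: sample several step-by-step answers and keep the most common final answer. `fake_llm` is a hypothetical stand-in for a real model call with random sampling, and the prompt wording and answer format (“The answer is N.”) are assumptions:

```python
import random
import re
from collections import Counter

# Zero-shot chain-of-thought: ask the model to reason step by step before answering
COT_TEMPLATE = "Q: {question}\nA: Let's think step by step."

def fake_llm(prompt, rng):
    """Hypothetical stand-in for a sampled LLM call (temperature > 0).
    Usually reaches the right answer (12), sometimes makes a mistake."""
    answer = rng.choice([12, 12, 12, 10, 14])
    return f"4 pens at 3 euros each... The answer is {answer}."

def extract_answer(completion):
    """Pull the final number out of a completion, or None if it is missing."""
    match = re.search(r"The answer is (-?\d+)", completion)
    return int(match.group(1)) if match else None

def self_consistency(question, llm, n_samples=15, seed=0):
    """Sample several reasoning paths and return the majority-vote answer."""
    rng = random.Random(seed)
    prompt = COT_TEMPLATE.format(question=question)
    answers = [extract_answer(llm(prompt, rng)) for _ in range(n_samples)]
    votes = Counter(a for a in answers if a is not None)
    return votes.most_common(1)[0][0], votes

answer, votes = self_consistency("How much do 4 pens cost at 3 euros each?", fake_llm)
print(answer, dict(votes))
```

The idea is that individual reasoning paths can go wrong in different ways, while the correct answer tends to be the one they agree on most often.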

It’s generally quite easy to find the information on these topics by reading books, research papers, articles and watching videos.

📩 Useful AI newsletters:

- The Batch (Deeplearning.ai)

- The Algorithm (MIT Technology Review)

- Papers with Code Newsletter

- AI Ethics Brief (Montreal AI Ethics Institute)

🚀 Specialized AI courses:

- AI Alignment Course — Developed with Richard Ngo (OpenAI)

- AI Governance Course

We are still in the very early days of AI, and we don’t know how things will turn out in the coming years. But one thing we know for sure is that things are changing, faster and faster. The only way to keep up is to keep learning.

I hope you found this post helpful. Any feedback or recommendations would be greatly appreciated!

Happy 2024 and beyond! 🚀

Disclaimer: Some links in this article are affiliate links. By using the links for qualified purchases, you help support me at no cost to you.